Logistic Regression

Model Setup

True model of logistic regression:

$$\pi = \frac{e^{\beta_0 + \beta_1 X_1 + \dots + \beta_k X_k}}{1 + e^{\beta_0 + \beta_1 X_1 + \dots + \beta_k X_k}}$$

- π is the probability that an observation is in a specified category of the binary Y variable, generally called the “success probability.”

- The denominator of the model is (1 + numerator), so π will always be less than 1.

- The numerator $e^{\beta_0 + \beta_1 X_1 + \dots + \beta_k X_k}$ must be positive, because it is a power of a positive value ($e$).
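A quick numerical check of the two bounds (π above 0 because the numerator is positive, π below 1 because the denominator is 1 + numerator). This is only a sketch with one predictor; the function name `success_probability` and the coefficient values are made up for illustration and do not come from the class notes.

```python
import numpy as np

def success_probability(x, beta0, beta1):
    """Logistic model with one predictor: pi = e^(b0 + b1*x) / (1 + e^(b0 + b1*x))."""
    numerator = np.exp(beta0 + beta1 * x)   # a power of e, so always positive
    return numerator / (1.0 + numerator)    # denominator = 1 + numerator

# Hypothetical coefficients, chosen only to show the shape of the curve
x = np.linspace(-10, 10, 201)
pi = success_probability(x, beta0=-1.0, beta1=0.8)

print(pi.min(), pi.max())   # both values stay strictly between 0 and 1
```

With a positive slope the curve is increasing in x, but it never reaches 0 or 1.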


Model Estimation:

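Recall: OLSE in MLR, find $\hat{\beta}$ that minimizes the sum of squared errors

$$\sum_i (y_i - \hat{y}_i)^2$$

- $y_i$ = observed value

- $\hat{y}_i$ = estimated value

(see class notes 10.03)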

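Problems with a binary response:

- The error $\varepsilon = Y - \pi$ is also binary, so it is not normally distributed any more.

- The variance is not constant: $\mathrm{Var}(Y) = \pi(1 - \pi)$ (from the Bernoulli distribution), so OLS is not optimal any more.

- Constraint on the response variable: $0 \le \pi \le 1$, whereas a linear function does not keep this constraint.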
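To see both problems concretely, here is a small sketch that fits a straight line by OLS to a binary response. The data are made up for illustration (they are not from class); the point is only that the fitted line leaves the [0, 1] range and that the Bernoulli variance π(1 − π) changes with π.

```python
import numpy as np

# Made-up binary data: y switches from 0 to 1 as x increases
x = np.arange(-5, 6, dtype=float)
y = (x > 0).astype(float)

# Fit a straight line by ordinary least squares
slope, intercept = np.polyfit(x, y, deg=1)
fitted = intercept + slope * x

# Bernoulli variance pi * (1 - pi) depends on pi, so it cannot be constant
pi_values = np.array([0.1, 0.5, 0.9])
bernoulli_var = pi_values * (1 - pi_values)

print(fitted.min(), fitted.max())   # smallest fitted value is below 0, largest is above 1
print(bernoulli_var)
```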

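Model Estimation (logistic regression):

Given the observations $(x_i, y_i)$, $i = 1, \dots, n$: the coefficients are estimated by maximum likelihood, i.e. find the $\beta$ that maximizes

$$L(\beta) = \prod_i \pi_i^{y_i} (1 - \pi_i)^{1 - y_i}$$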
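Logistic regression coefficients are usually estimated by maximum likelihood, which has no closed form and is solved iteratively. The sketch below uses Newton's method for one predictor; it is one common way to do this, not necessarily the method used in class or by any particular software. The function name `fit_logistic` and the tiny data set are invented for illustration.

```python
import numpy as np

def fit_logistic(x, y, n_iter=50, tol=1e-10):
    """Maximum likelihood fit of pi = 1 / (1 + exp(-(b0 + b1*x))) by Newton's method."""
    X = np.column_stack([np.ones(len(x)), x])    # add the intercept column
    beta = np.zeros(X.shape[1])                  # start from beta = 0
    for _ in range(n_iter):
        pi = 1.0 / (1.0 + np.exp(-X @ beta))     # current fitted probabilities
        score = X.T @ (y - pi)                   # gradient of the log-likelihood
        info = X.T @ (X * (pi * (1 - pi))[:, None])   # negative Hessian
        step = np.linalg.solve(info, score)
        beta = beta + step
        if np.max(np.abs(step)) < tol:           # stop once the update is negligible
            break
    return beta

# Made-up data (not perfectly separated, so the MLE exists)
x = np.array([1, 2, 3, 4, 5, 6, 7, 8], dtype=float)
y = np.array([0, 0, 1, 0, 1, 1, 0, 1], dtype=float)

beta_hat = fit_logistic(x, y)
pi_hat = 1.0 / (1.0 + np.exp(-(beta_hat[0] + beta_hat[1] * x)))
print(beta_hat)
```

At the solution the score equations hold, so the fitted probabilities sum to the number of observed successes.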

Coefficient Interpretation

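Given:

$$\pi = \frac{e^{\beta_0 + \beta_1 X_1 + \dots + \beta_k X_k}}{1 + e^{\beta_0 + \beta_1 X_1 + \dots + \beta_k X_k}}$$

Odds of success:

$$\frac{\pi}{1 - \pi} = e^{\beta_0 + \beta_1 X_1 + \dots + \beta_k X_k}$$

=>

$$\ln\left(\frac{\pi}{1 - \pi}\right) = \beta_0 + \beta_1 X_1 + \dots + \beta_k X_k$$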
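The link between probability, odds, and log-odds can be checked numerically. A minimal sketch: the helper names and the coefficient values are hypothetical, chosen only so the arithmetic is easy to follow.

```python
import math

def odds_from_probability(pi):
    """Odds of success = pi / (1 - pi)."""
    return pi / (1.0 - pi)

def probability_from_odds(odds):
    """Inverse conversion: pi = odds / (1 + odds)."""
    return odds / (1.0 + odds)

# Hypothetical coefficients and predictor value
beta0, beta1, x = -1.0, 0.5, 3.0
eta = beta0 + beta1 * x                       # linear predictor
pi = math.exp(eta) / (1.0 + math.exp(eta))    # success probability from the model

odds = odds_from_probability(pi)              # equals e^eta
log_odds = math.log(odds)                     # equals eta, which is linear in x
print(odds, math.exp(eta), log_odds, eta)
```

For example, a success probability of 0.75 corresponds to odds of 3 (three successes for every failure).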

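- For a numerical predictor $X_j$:

- odds ratio of $X_j$ = $e^{\beta_j}$

- measures the change in the odds of success when $X_j$ increases by 1 unit (other predictors held fixed)

- e.g.:

- odds ratio of age = $e^{\hat{\beta}_{\text{age}}}$ = 1.0097

when age increases by 1, the odds of being obese increase by 0.97%.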

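cases:

if $\beta_j > 0$: odds ratio > 1, the odds of success increase as $X_j$ increases

if $\beta_j = 0$: odds ratio = 1, the odds of success do not change with $X_j$

if $\beta_j < 0$: odds ratio < 1, the odds of success decrease as $X_j$ increases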

- see (class notes 10.05)
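The age example can be worked through in a few lines. The odds ratio 1.0097 is the value from the notes; the 10-year comparison is an added illustration, assuming the same model holds over that range.

```python
import math

odds_ratio_age = 1.0097                      # odds ratio quoted in the notes
beta_age = math.log(odds_ratio_age)          # coefficient on the log-odds scale

# Percent change in the odds for a 1-unit increase in age
pct_change_per_year = (odds_ratio_age - 1.0) * 100.0

# Odds ratios multiply: a 10-unit increase multiplies the odds by exp(10 * beta)
odds_ratio_10_years = math.exp(10 * beta_age)

print(beta_age, pct_change_per_year, odds_ratio_10_years)
```

Note that the 10-year odds ratio is slightly more than 1 + 10 × 0.0097, because the effect compounds multiplicatively on the odds.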

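- For categorical predictors: Given a dummy variable $D$:

- $D = 1$ when the observation is in the category, $D = 0$ when it is in the reference category

- odds of success ($D = 1$) = $e^{\beta_0 + \beta_D}$

- odds of success (reference, $D = 0$) = $e^{\beta_0}$, so $e^{\beta_D}$ is the odds ratio relative to the reference category we choose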

example: (see class notes 10.05 page 10)
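For a single two-level categorical predictor, the odds ratio against the reference category can be computed directly from counts. The counts below are hypothetical (they are not the example from the class notes), and the variable names are made up.

```python
import math

# Hypothetical counts of (success, failure) in each group
ref_success, ref_failure = 20, 80      # reference category (dummy = 0)
cat_success, cat_failure = 30, 70      # other category (dummy = 1)

odds_ref = ref_success / ref_failure   # odds of success in the reference category
odds_cat = cat_success / cat_failure   # odds of success in the other category
odds_ratio = odds_cat / odds_ref

# With one dummy predictor, the fitted model reproduces the observed odds:
# intercept = log odds of the reference group, dummy coefficient = log odds ratio
beta0 = math.log(odds_ref)
beta_d = math.log(odds_ratio)

print(odds_ref, odds_cat, odds_ratio)
```

Changing which category is the reference inverts the odds ratio (and flips the sign of the dummy coefficient), so the choice of reference should always be stated.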

- Tests in logistic regression:

- Wald test for an individual coefficient

- basically the t test in MLR

- Deviance likelihood ratio test

- alternative to the F test in MLR

Wald test for individual coefficient

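$H_0: \beta_j = 0$ vs. $H_a: \beta_j \neq 0$

Test stat:

$$z = \frac{\hat{\beta}_j}{SE(\hat{\beta}_j)}$$

Rejection rule: reject $H_0$ if $|z| > z_{\alpha/2}$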

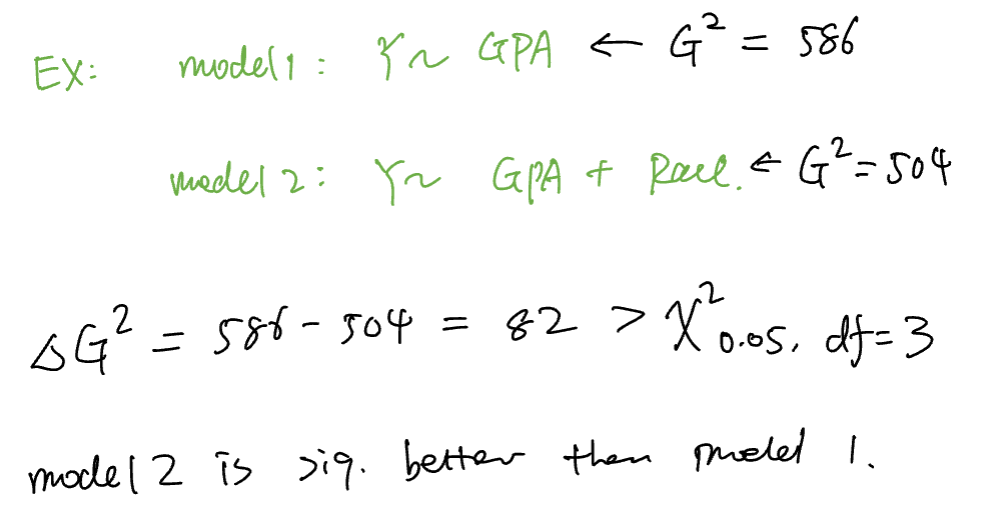
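A sketch of the Wald test computation, assuming the usual form (estimate divided by its standard error, compared with the standard normal). The estimate and standard error below are hypothetical, and `wald_test` is a made-up helper name; the p-value uses only the standard library.

```python
import math

def wald_test(beta_hat, se):
    """Wald z statistic and two-sided p-value from the standard normal."""
    z = beta_hat / se
    p_value = math.erfc(abs(z) / math.sqrt(2.0))   # P(|Z| > |z|)
    return z, p_value

# Hypothetical estimate and standard error
z, p_value = wald_test(beta_hat=0.8, se=0.25)
reject = abs(z) > 1.96        # critical value for alpha = 0.05

print(z, p_value, reject)
```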

Deviance likelihood Ratio Test:

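The F test in MLR computes the reduction in SSE; the deviance test computes the reduction in deviance (deviance = $-2\ln L$):

$$G^2 = \text{Deviance(reduced model)} - \text{Deviance(full model)}$$

Rejection rule: reject $H_0$ if $G^2 > \chi^2_{\alpha,\,df}$, where df = number of coefficients being tested

df = 1 when testing a single coefficient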
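A sketch of the deviance test for the df = 1 case, assuming the statistic is the drop in deviance from the reduced model to the full model. The two deviance values are hypothetical. For df = 1 the chi-square tail probability can be written with the normal tail, so no extra library is needed.

```python
import math

def deviance_test_df1(deviance_reduced, deviance_full):
    """Drop-in-deviance statistic and chi-square (df = 1) p-value."""
    g2 = max(deviance_reduced - deviance_full, 0.0)
    # For df = 1: P(chi2 > g2) = P(|Z| > sqrt(g2)) = erfc(sqrt(g2 / 2))
    p_value = math.erfc(math.sqrt(g2 / 2.0))
    return g2, p_value

# Hypothetical deviances for a reduced model and a full model with one extra term
g2, p_value = deviance_test_df1(deviance_reduced=120.5, deviance_full=112.3)
reject = g2 > 3.841           # chi-square critical value for alpha = 0.05, df = 1

print(g2, p_value, reject)
```

For more than one dropped coefficient, df changes and a chi-square table or a statistics library is needed instead of this shortcut.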

Model Diagnosis

- Multicollinearity

- stays the same for logistic regression (same as MLR)

- Influential points

- Not going to work:

- Model assumptions:

- Heteroscedasticity

- Normality
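Because multicollinearity only involves the predictors, it can be checked the same way as in MLR, for example with variance inflation factors (VIF). The sketch below uses the identity that the VIFs are the diagonal of the inverse correlation matrix of the predictors; the data and the helper name `vif` are made up for illustration.

```python
import numpy as np

def vif(X):
    """Variance inflation factors: diagonal of the inverse predictor correlation matrix."""
    corr = np.corrcoef(X, rowvar=False)
    return np.diag(np.linalg.inv(corr))

# Made-up predictors: x2 is almost a copy of x1, x3 is unrelated to both
x1 = np.array([1, 2, 3, 4, 5, 6, 7, 8], dtype=float)
x2 = x1 + np.array([0.1, -0.1, 0.1, -0.1, 0.1, -0.1, 0.1, -0.1])
x3 = np.array([1, -1, -1, 1, 1, -1, -1, 1], dtype=float)

X = np.column_stack([x1, x2, x3])
print(vif(X))   # very large for x1 and x2, about 1 for x3
```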

Influential Points Detection

- Pearson residual: $r_i = \dfrac{y_i - \hat{\pi}_i}{\sqrt{\hat{\pi}_i (1 - \hat{\pi}_i)}}$

rule:

- Deviance residual:

$d_i = \sqrt{-2 \ln \hat{\pi}_i}$ if $y_i = 1$

$d_i = -\sqrt{-2 \ln (1 - \hat{\pi}_i)}$ if $y_i = 0$

Rule :

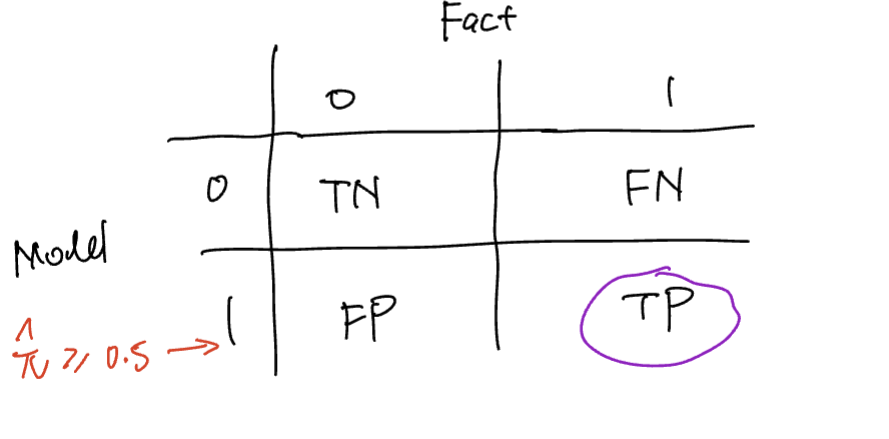
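Both residuals are easy to compute once fitted probabilities are available. A sketch using the standard definitions for a binary response; the observations and fitted probabilities are hypothetical and the function names are made up.

```python
import numpy as np

def pearson_residuals(y, pi_hat):
    """(observed - fitted) divided by the Bernoulli standard deviation."""
    return (y - pi_hat) / np.sqrt(pi_hat * (1 - pi_hat))

def deviance_residuals(y, pi_hat):
    """Signed square root of each observation's contribution to the deviance."""
    log_lik = y * np.log(pi_hat) + (1 - y) * np.log(1 - pi_hat)
    return np.sign(y - pi_hat) * np.sqrt(-2.0 * log_lik)

# Hypothetical outcomes and fitted probabilities; the last two are badly fitted
y = np.array([1.0, 0.0, 1.0, 0.0])
pi_hat = np.array([0.5, 0.2, 0.05, 0.9])

print(pearson_residuals(y, pi_hat))
print(deviance_residuals(y, pi_hat))
```

Observations whose outcome disagrees strongly with the fitted probability (the last two here) get the largest residuals in absolute value, which is what flags them for a closer look.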

Confusion matrix:
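A confusion matrix cross-tabulates the observed categories against the predicted ones. The sketch below classifies an observation as a success when its fitted probability is at least 0.5; that cutoff is an assumption, and the data and the function name are made up for illustration.

```python
import numpy as np

def confusion_matrix(y_true, pi_hat, threshold=0.5):
    """Rows = actual (0, 1), columns = predicted (0, 1)."""
    y_pred = (pi_hat >= threshold).astype(int)   # assumed cutoff of 0.5
    tn = int(np.sum((y_true == 0) & (y_pred == 0)))
    fp = int(np.sum((y_true == 0) & (y_pred == 1)))
    fn = int(np.sum((y_true == 1) & (y_pred == 0)))
    tp = int(np.sum((y_true == 1) & (y_pred == 1)))
    return np.array([[tn, fp], [fn, tp]])

# Hypothetical outcomes and fitted probabilities
y_true = np.array([1, 0, 1, 1, 0, 0, 1, 0])
pi_hat = np.array([0.9, 0.2, 0.4, 0.7, 0.6, 0.1, 0.8, 0.3])

cm = confusion_matrix(y_true, pi_hat)
accuracy = np.trace(cm) / cm.sum()   # share of correctly classified observations
print(cm)
print(accuracy)
```

Moving the threshold trades false positives against false negatives, so the matrix should always be reported together with the cutoff used.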

More sources: https://online.stat.psu.edu/stat462/node/207/